Personal Voice: A triumph (and a request)

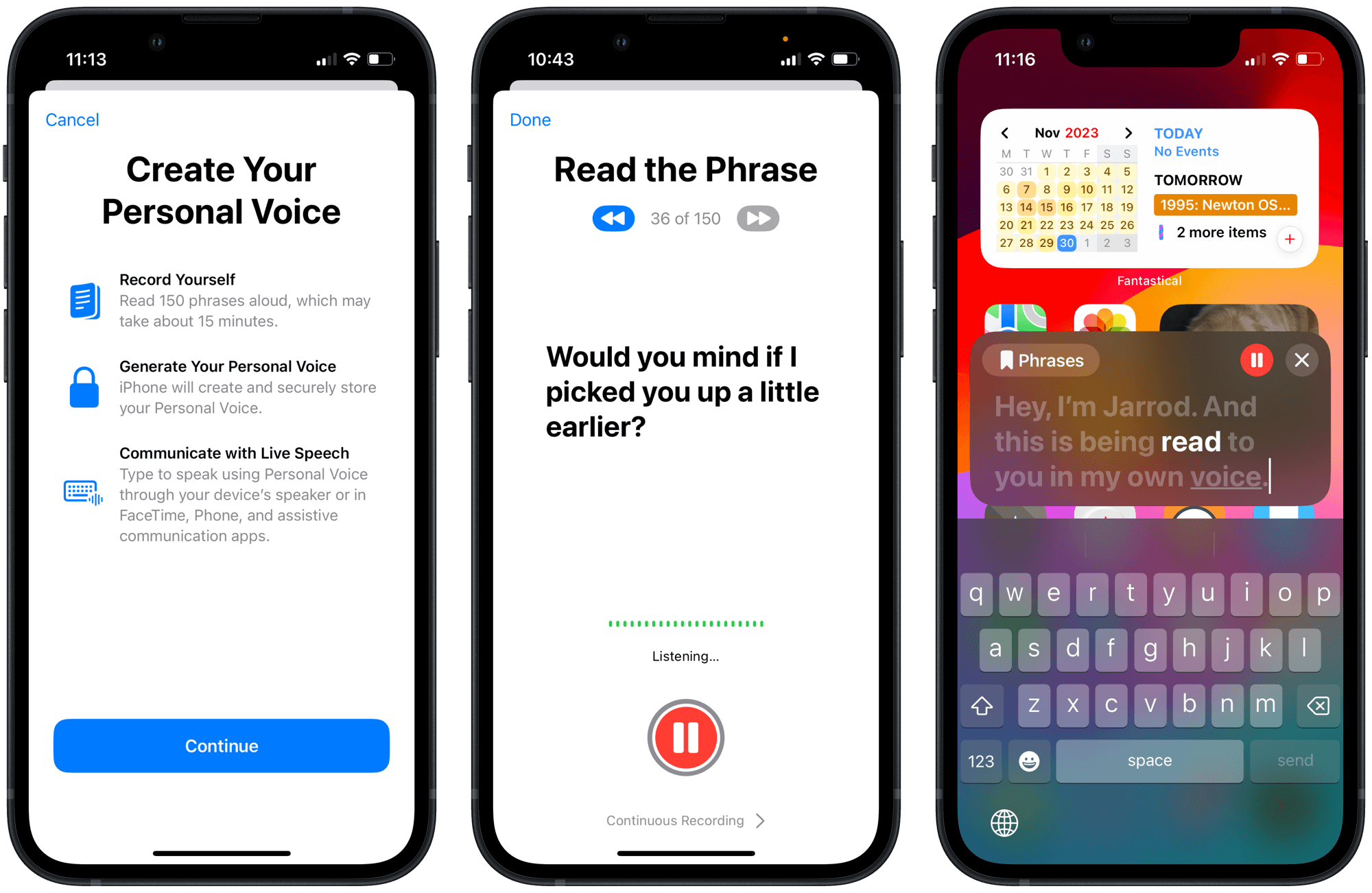

I finally got around to creating a Personal Voice on my iPhone the other day. I had big plans for it, and was thrilled it only took 15 minutes of recording, and then one overnight of processing — I thought it’d take like an hour, and then days of waiting. Guess what, it’s pretty accurate, too!

If you haven’t heard of Personal Voice, it was introduced as a way for people with degenerative diseases like ALS to preserve their unique voice and use it to “speak” aloud through their devices. Apple happened to put out a Newsroom article about it today:

This fall, Apple launched its new Personal Voice feature, available with iOS 17, iPadOS 17, and macOS Sonoma. With Personal Voice, users at risk of speech loss can create a voice that sounds like them by following a series of text prompts to capture 15 minutes of audio. Apple has long been at the forefront of neural text-to-speech technology. With Personal Voice, Apple is able to train neural networks entirely on-device to advance speech accessibility while protecting users’ privacy.

Paired with Live Speech, another new feature from this fall, users can hold ongoing conversations in a replica of their own voice:

Live Speech, another speech accessibility feature Apple released this fall, offers users the option to type what they want to say and have the phrase spoken aloud, whether it is in their Personal Voice or in any built-in system voice. Users with physical, motor, and speech disabilities can communicate in the way that feels most natural and comfortable for them by combining Live Speech with features like Switch Control and AssistiveTouch, which offer alternatives to interacting with their device using physical touch.

The Newsroom article was more than just a reminder about Personal Voice and Live Speech, though. As an entry posted under the new Stories branding, there was an even more personal side to it. Apple spotlit Tristram Ingham, an accessibility advocate, who has a condition which “causes progressive muscle degeneration starting in the face, shoulders, and arms, and can ultimately lead to the inability to speak, feed oneself, or in some cases, blink the eyes.”

Ingham created his Personal Voice for Apple’s “The Lost Voice,” in which he uses his iPhone to read aloud a new children’s book of the same name created for International Day of Persons with Disabilities. When he tried the feature for the first time, Ingham was surprised to find how easy it was to create, and how much it sounded like him.

That children’s book is the subject of the short film, ‘The Lost Voice’, that Apple made to accompany this story, and, spoilers, Ingham narrates it using his Personal Voice and Live Speech.

It’s a touching film, and an especially relevant article to read this week, considering that this Sunday is the International Day of Persons with Disabilities (a fact I didn’t realize until seeing the MacStories article about Apple’s Newsroom feature).

I’m not currently at risk of losing my voice, but I’m certainly sympathetic to those who are. Here’s Ingham on the importance of having a digital voice that captures your unique intonations and mannerisms:

“Disability communities are very mindful of proxy voices speaking on our behalf,” Ingham says. “Historically, providers have spoken for disabled people, family have spoken for disabled people. If technology can allow a voice to be preserved and maintained, that’s autonomy, that’s self-determination.”

I applaud Apple for having a guiding vision for this set of features. Certainly it’s fun for someone like me to be able to play around with an on-device voice model, but for many other people, it can be the difference between retaining part of their identity and having yet another bit of it snatched from them by the unforgiving jaws of a cruel disease.

Frankly, it’s astounding that we have the power to make a replica of our voice using nothing but the device that already lives in our pocket. I consider the marriage of microphone quality, software UX, machine learning models, and neural engine cores that makes this feature possible a true triumph.

Also, you never know when you might need it. I (also) encourage everyone to spend the few minutes it takes to have a replica of their voice ready, just in case.

Finish the job with your own apps, please, Apple

About those “big plans” for my Personal Voice that I mentioned earlier. For years, I’ve dreamed of automatically creating an audio version of my blog posts, read in my own voice. We talk about a writer’s writing voice being distinctive, but I think there’s something extra special about hearing their written words read aloud in their verbal voice. Ben Thompson has this dialed in, with his Stratechery articles released both in text form and as a podcast read by him. I don’t have the time, tools, or bandwidth to record a bespoke podcast for each of my silly blog posts. But I could create a close approximation with Personal Voice and Shortcuts.

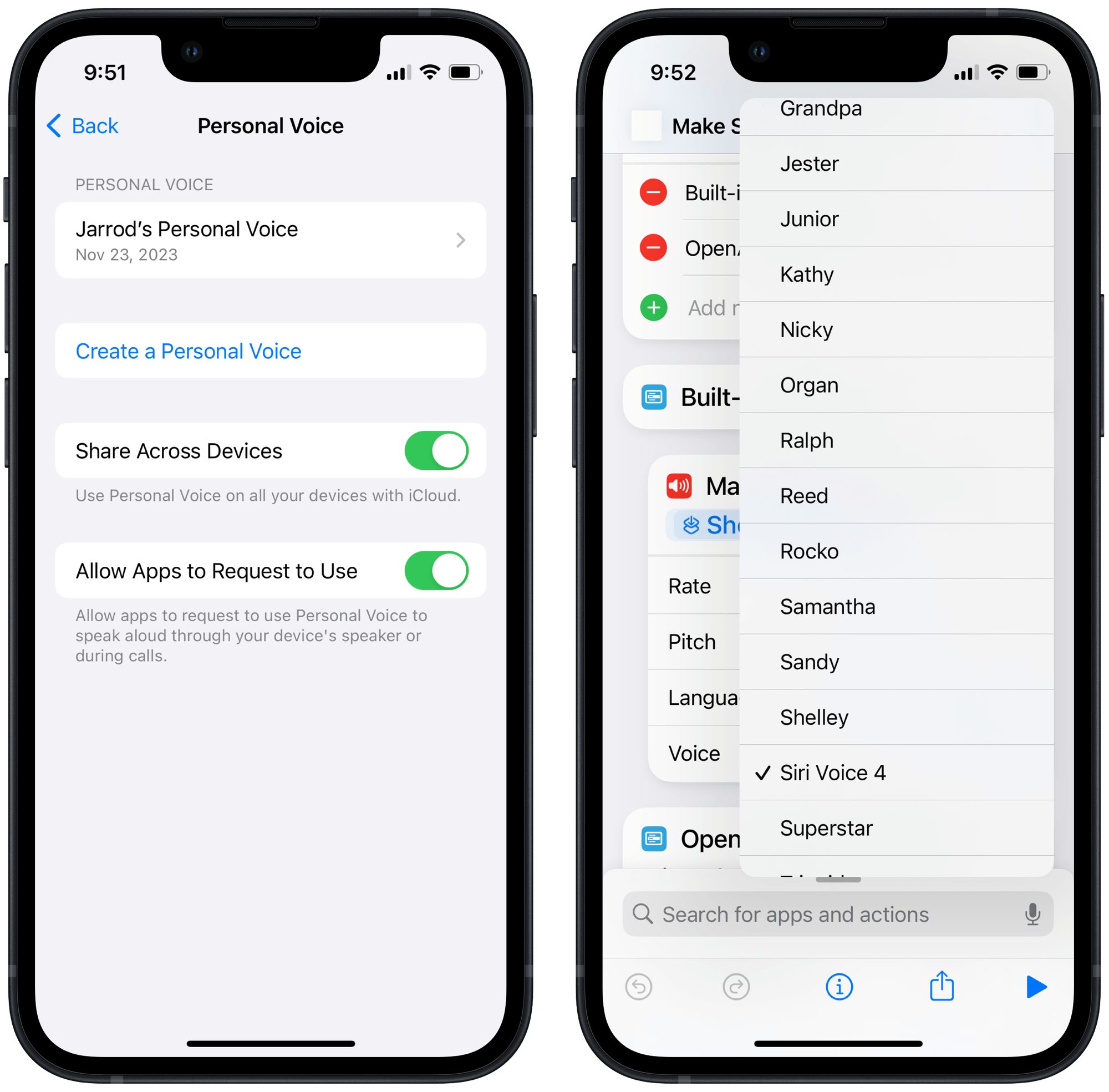

Or, at least, I’d like to, but Shortcuts lacks the ability to use my Personal Voice with its actions. I was so sure that my Personal Voice would appear as an option in the ‘Speak Text’ and ‘Make Spoken Audio from Text’ actions once it was finished processing. I even had a shortcut built out, ready to start producing narrated versions of my blog posts. Why was I so convinced? Because the Personal Voice pane in Settings provides a specific option to let other apps use your voice. Certainly Shortcuts, the first-party Apple app that has hooks into all kinds of settings and that is the poster child for extending Accessibility features into complex workflows that can’t be accomplished any other way, would be the first to request use of my voice. Alas, it appears to have slipped through the cracks.

My dream will have to wait. But getting this included is far more important than my inconsequential audible blog post idea. Shortcuts’ integration with the rest of the system’s features would make Personal Voice way more powerful and able to handle nuanced situations.

Having some saved phrases in Live Speech is great, but imagine having a dictionary full of common phrases you use, able to be contextualized and surfaced based on location, calendar events, or Focus mode.

Pasting or typing text into the Live Speech field is useful for ongoing conversations, but what if you wanted to save that favorite bedtime story, read in full in your voice, as an audio file that your granddaughter could listen to, again and again, long after you’re gone?

With Shortcuts and Personal Voice, those ideas could easily be reality, able to be harnessed by billions of people around the world.

In the meantime, I’ve laid out this case in a Feedback (FB13427747) to Apple and hope we’ll see Personal Voice get the extensibility it deserves very soon.