Fans of Apple’s iPhone Upgrade Program — the one where you pay monthly for an iPhone and have the opportunity to upgrade to a new model after a given number of payments — rejoice! You can now get a similar deal for an iPad Pro. And when I say “deal”, I really mean it. More on that in a bit.

You might remember the folks at Upgraded have offered a MacBook Upgrade Program for a while now. I used it to purchase this very M4 MacBook Air that I’m typing to you on, and have been super happy with the experience. Their website is crazy-easy to use, setting up the loan is very quick, and AppleCare+ is included.

People who like to be on the cutting edge have been asking Apple to offer this kind of program for products other than the iPhone for years. Upgraded beat them to it for the Mac. And now they have expanded their lineup to include the iPad Pro, lapping Apple at its own game! Here’s how it works, according to their emailed announcement:

Right now, it’s just the iPad Pro models — but the experience works just like our MacBook program. Seamless, flexible, and future-ready. […]

Buying an iPad Pro with us is just like buying a MacBook:

- Pay monthly over 36 months.

- Plans start at $31.89/month for the 11-inch, or $40.78/month for the 13-inch.

- After 24 payments, you can upgrade to a new model or finish the last 12 payments to pay it off.

- If you upgrade, we’ll send a prepaid return box. Just transfer your data, send the old iPad back, and we’ll refurbish it for its next life.

I mentioned that the program is great for folks who want the latest and greatest, but I think it’s equally appealing to anyone who wants or needs to pay off their gadgets over time. If you’re happy with your iPad after two years, just hang onto it, pay it off, and it’s yours to keep!

Neat! But you said their prices are lower than Apple’s own?

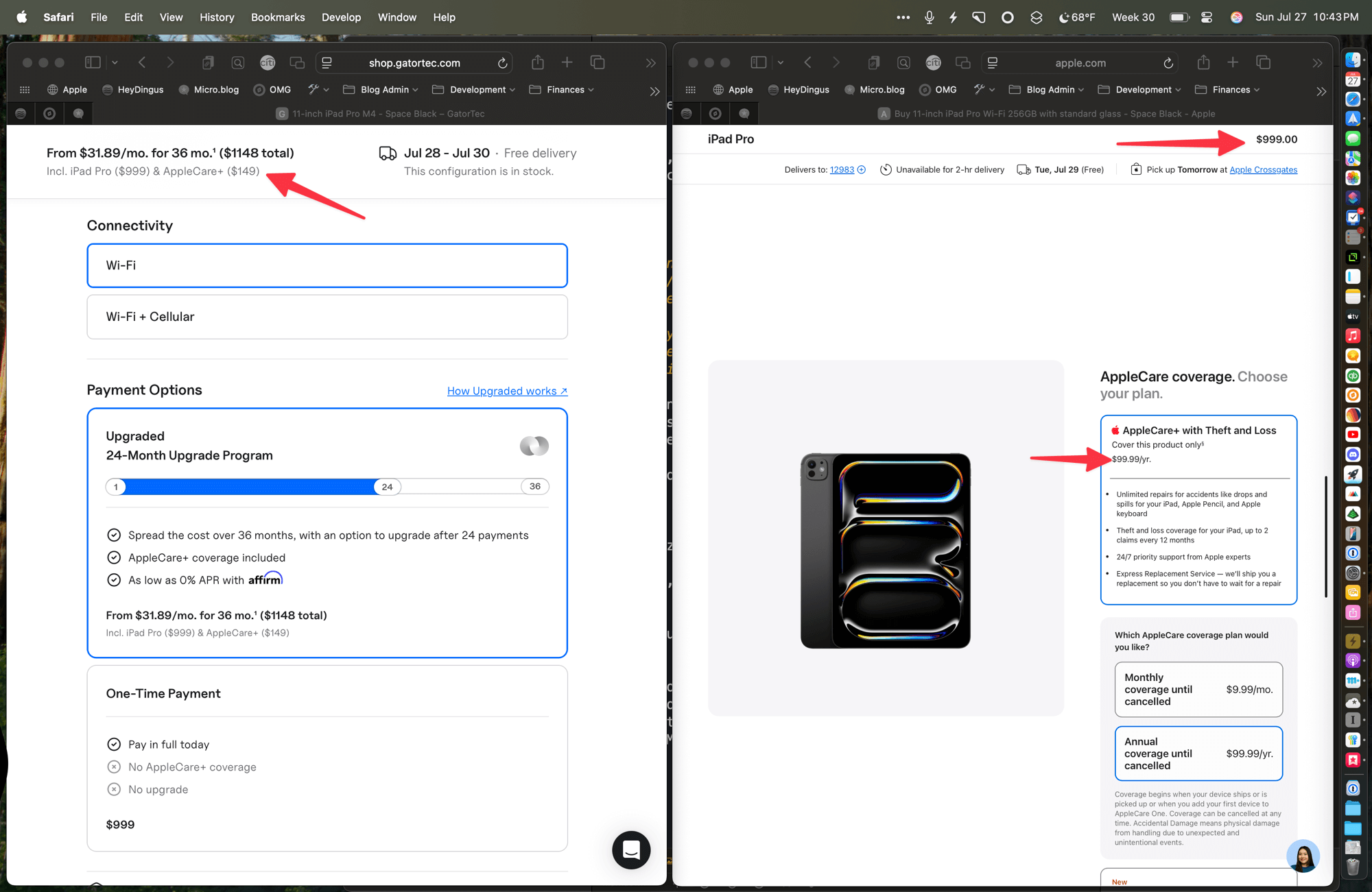

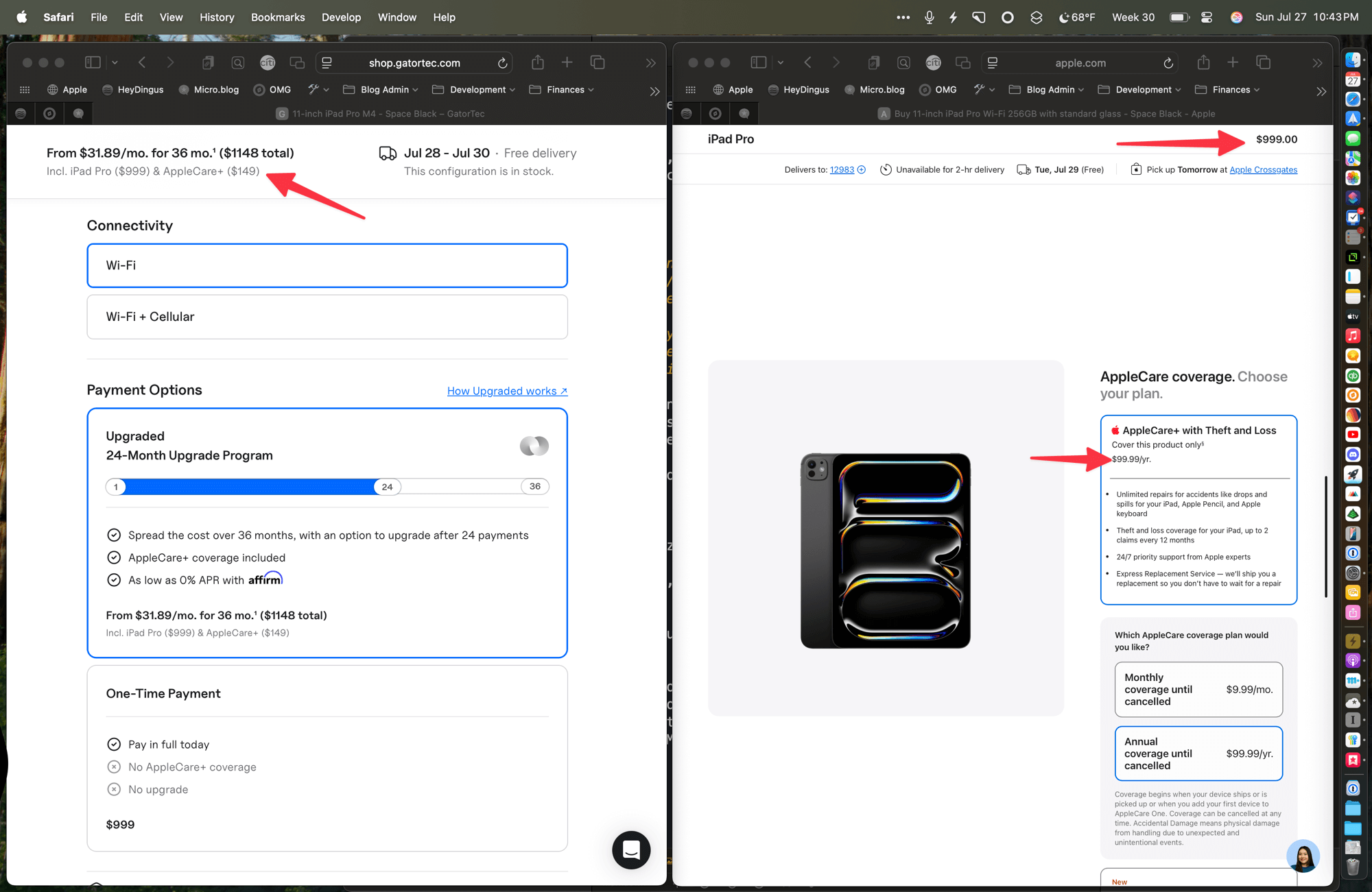

Yep! Crazy, right? But here it is in black and white:

iPad Pro, 11-inch, Wi-Fi, 256GB, Space Black

- Upgraded: $999 for the iPad + $149 one-time payment for AppleCare+ = $1148

- Apple: $999 for the iPad + $99.99/year for AppleCare+ (3 years for $299.97) = $1298.97

As you can see, Upgraded has Apple beat by about $150.
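For anyone who wants to double-check the math, here’s a quick Python sketch of the comparison above. It works in cents to sidestep floating-point rounding, and the constant and function names are my own invention. The last check is a hunch rather than anything Upgraded has confirmed: assuming the $31.89/month plan covers this exact bundle at 0% interest, 36 payments should land right around the same $1148.

```python
# All prices are in cents to avoid floating-point rounding surprises.
IPAD_PRICE = 999_00             # iPad Pro, 11-inch, Wi-Fi, 256GB
UPGRADED_APPLECARE = 149_00     # Upgraded: one-time AppleCare+ charge
APPLE_APPLECARE_YEARLY = 99_99  # Apple: AppleCare+ billed per year

# Assumption (not confirmed by Upgraded): the $31.89/month plan is this
# same iPad + AppleCare+ bundle, paid off over 36 months at 0% interest.
MONTHLY_PAYMENT = 31_89
PAYMENTS = 36


def upgraded_total() -> int:
    """Upgraded's price: the iPad plus its one-time AppleCare+ charge."""
    return IPAD_PRICE + UPGRADED_APPLECARE


def apple_total(years: int) -> int:
    """Apple's price: the iPad plus `years` of annual AppleCare+."""
    return IPAD_PRICE + APPLE_APPLECARE_YEARLY * years


def dollars(cents: int) -> str:
    """Format a non-negative amount in cents as a dollar string."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    print("Upgraded:", dollars(upgraded_total()))                  # $1148.00
    print("Apple (3 years):", dollars(apple_total(3)))             # $1298.97
    print("Savings:", dollars(apple_total(3) - upgraded_total()))  # $150.97
    print("36 payments:", dollars(MONTHLY_PAYMENT * PAYMENTS))     # $1148.04
```

The four cents between the last two lines is just rounding in the monthly figure, which is what makes me suspect the 11-inch plan price already has AppleCare+ baked in.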

Upgraded’s checkout on the left, Apple’s on the right.

Whoa! How is that possible?

How? I’m not sure. But I think it’s because they lined all of this up before Apple’s recent reshuffle of AppleCare+.

I heard that Apple’s monthly and annual pricing went up as they eliminated any AppleCare without Theft & Loss Protection. And I think you used to be able to buy AppleCare for iPads with a one-time payment for a set period (two years?). But that’s no longer an option. It’s now monthly, yearly, or bust.

Even if Upgraded’s AppleCare coverage only lasts two years (right when you can swap to a new model) instead of three, they’ve still got Apple beat by about $50: two years of Apple’s coverage costs $199.98, versus Upgraded’s one-time $149.

The Debt Ceiling

Now, for my MacBook Air purchased through Upgraded, I make monthly payments with 0% interest. That interest rate depends on how Affirm, which manages the loan, judges your credit, so I can’t guarantee that you’ll get that same rate. It may not be as worthwhile if you’re paying a higher interest rate. I don’t like raising my personal debt ceiling, so I probably wouldn’t have sprung for the deal if I were paying extra in interest. But it is absolutely possible to pay nothing extra, in fact to pay less than what Apple charges, and still have the opportunity to easily upgrade to a newer model in a couple of years.

What will they think up next?

I expect Upgraded will have their hands full for a while expanding to include Mac desktops and other iPad models. But I think they’ve started with the right products.

But what do I want them to offer next?

First of all, the iPhone. I’m not on one of the major cellular carriers, which means I can’t get a phone through Apple’s iPhone Upgrade Program. So, personally, I’d be geeked if Upgraded could offer iPhones on their upgrade program. I kind of doubt it since they’re not a carrier, but maybe!

Next on my list would be the Apple Watch. It’s the only other bit of tech that I feel compelled to upgrade every few years, if only for the battery life improvements and additional sensors they keep packing into newer models. My original Apple Watch Ultra is showing its age, and I’m excited to upgrade it this year. If I could do so at a flat, monthly rate and know that I could easily swap it out for a new one again in a few years if I wanted to, I’d definitely jump on board. Fingers crossed.

Disclosure

I don’t have any sort of affiliation with Upgraded, but they did offer me a one-time discount on my MacBook Air purchase earlier this year. It was unprompted, and basically done as a thank you for all the customers I had sent their way after I first wrote about them last year. I probably would have purchased my MacBook through Upgraded even without the discount just so I could pay it off over time. And now that I’ve actually tried their service, I can wholeheartedly recommend it.